Crawling is the automated process in which search engine bots, also known as crawlers, spiders, or web crawlers, systematically search the Internet to discover websites and capture their content. This process is the first and fundamental step for a website to appear in the search results. Crawlers navigate from one known URL to others by following hyperlinks on the pages visited, thus mapping a huge, interconnected network of websites.

How does the crawling process work?

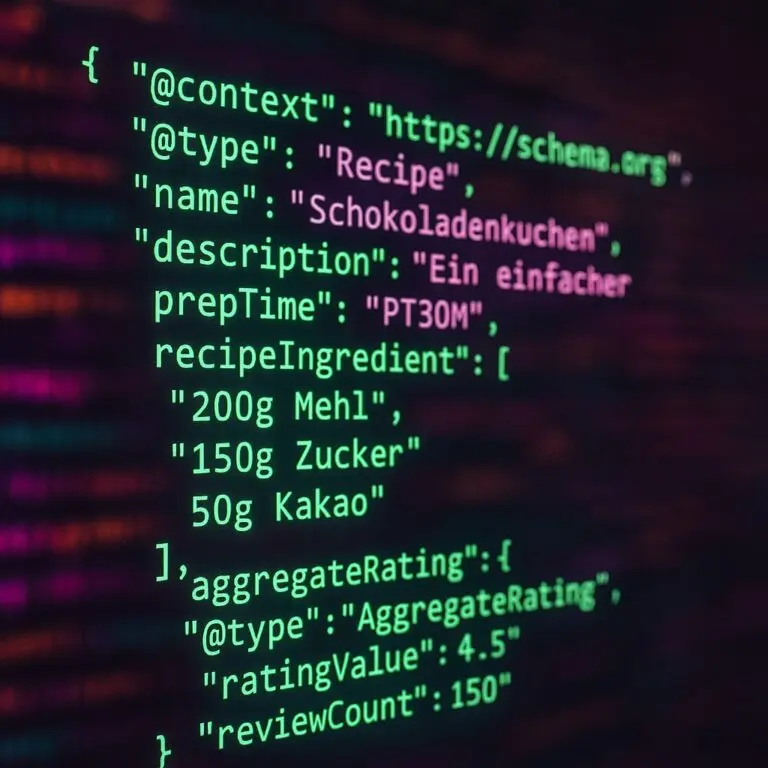

A web crawler starts with a so-called "seed list" of URLs and retrieves these pages. During this process, it analyzes the HTML code and identifies further internal and external links. The bot then follows this network of links to find new pages that were previously unknown or to recognize changes to pages that have already been recorded. The information collected includes text, images, videos, and other file types. This data is transmitted to the search engine's servers, where it is prepared for the next processing step, indexing.
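The link-following loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular search engine works: the `crawl` function, the `LinkExtractor` class, the `max_pages` limit, and the in-memory `PAGES` dictionary standing in for real HTTP requests are all assumptions made for the example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects the href values of all <a> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl starting from the seed list.

    fetch(url) is a caller-supplied function that returns the HTML of a
    page, or None if the page cannot be retrieved.
    Returns the URLs in the order in which they were retrieved.
    """
    frontier = deque(seed_urls)   # URLs waiting to be visited
    seen = set(seed_urls)         # URLs already queued, to avoid repeats
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue              # unreachable page: skip it
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop "#fragment" parts.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return visited


# Hypothetical in-memory "web" used instead of real network requests.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog#top">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/about">About</a> <a href="/missing">Gone</a>',
}

order = crawl(["https://example.com/"], PAGES.get)
print(order)
```

Starting from a single seed URL, the sketch discovers the other two pages through their links and skips the one that cannot be retrieved. A production crawler would additionally respect robots.txt, throttle its requests, and revisit known pages to detect changes.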

The frequency and intensity with which a crawler visits a website depend on various factors. These include the popularity and freshness of the content, the loading speed of the website, and the stability of the server. Large and frequently updated websites are generally crawled more often than smaller or static ones.

Importance for SEO and control of crawling

Crawling is of crucial importance for search engine optimization (SEO), as it is the prerequisite for indexing and thus for the visibility of a website in the search results. A page that cannot be crawled cannot be included in the index of a search engine and therefore cannot rank.

Website operators can specifically control the crawling process to make the work of search engine bots easier and use resources efficiently:

- robots.txt: This text file, which is located in the root directory of a website, gives search engine crawlers instructions on which areas of the site they may and may not crawl. This is useful for excluding unnecessary or sensitive content from crawling and thus optimizing the so-called crawl budget.
- Sitemap (sitemap.xml): An XML sitemap is a file that lists all relevant URLs of a website. It serves as a kind of guide for search engines to discover and crawl all important pages quickly and completely. The sitemap can be referenced in the robots.txt file or submitted directly in tools such as Google Search Console.
- Crawl budget: The term crawl budget refers to the amount of resources (time and capacity) that a search engine spends on crawling a specific website within a given time frame. Efficient use of the crawl budget is particularly important for large websites to ensure that all relevant content is regularly crawled and indexed.
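As an illustration of how a crawler might interpret these instructions, the following sketch uses Python's standard `urllib.robotparser` module on a small robots.txt. The file contents, the example.com URLs, the `ExampleBot` user agent, and the `allowed` helper are assumptions made for the example; `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site: two blocked areas
# and a reference to the XML sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url, user_agent="ExampleBot"):
    """Return True if the given user agent may crawl the URL."""
    return parser.can_fetch(user_agent, url)


print(allowed("https://example.com/blog/post"))    # not covered by a Disallow rule
print(allowed("https://example.com/admin/login"))  # inside a disallowed area
print(parser.site_maps())                          # sitemap URLs listed in the file
```

Here the blog URL is allowed, the admin URL is blocked, and the sitemap reference is read from the same file. Compliance with robots.txt is voluntary on the crawler's side, so the file steers well-behaved bots but is not an access control mechanism.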

By optimizing the technical structure, maintaining clear internal linking, and avoiding crawl errors, website operators can ensure that their content is correctly captured by search engines and presented in the search results.